Every now and again, there comes a technology so indistinguishable from magic that crowds just sit back and wonder whether civilization around us will ever be the same again.

Extended reality is unquestionably the "closest thing to magic" in this decade, and the good news is that it has finally passed its antediluvian phase. It is now entering the mainstream and inundating shopping, gaming, media, robotics, and possibly every interactive medium with its indomitable influence.

For more than seven years, Avataar has been at the forefront of this revolution, witnessing its unprecedented impact.

Making 3D Creation Easy and Available to All

At Avataar, we have been working to establish a new paradigm of visual discovery to provide shoppers with a more seamless shopping experience.

A persistent challenge in making 3D universally accessible is that although manually created 3D assets are photorealistic, they are arduous to create and require uncompromised expertise from professional 3D designers.

This makes it hard to scale 3D across all SKUs, leaving shoppers to make purchase decisions mostly based on 2D images.

At Avataar, we have been working at the intersection of deep learning and computer graphics to make 3D assets easy and available for everyone.

Developing a 3D camera, and extending it well beyond that while keeping it nimble, is part of the plan. This blog post skims the breadth of technical problems that we're actively pursuing.

Neural Radiance Fields for Quality and Scale

We represent 3D geometry as an implicit neural function. This representation has recently emerged as a powerful one for 3D, encoding a high-quality surface with intricate details into the parameters of a deep neural network. This leaves us with an optimization problem to solve - fitting an implicit neural surface to input observations.

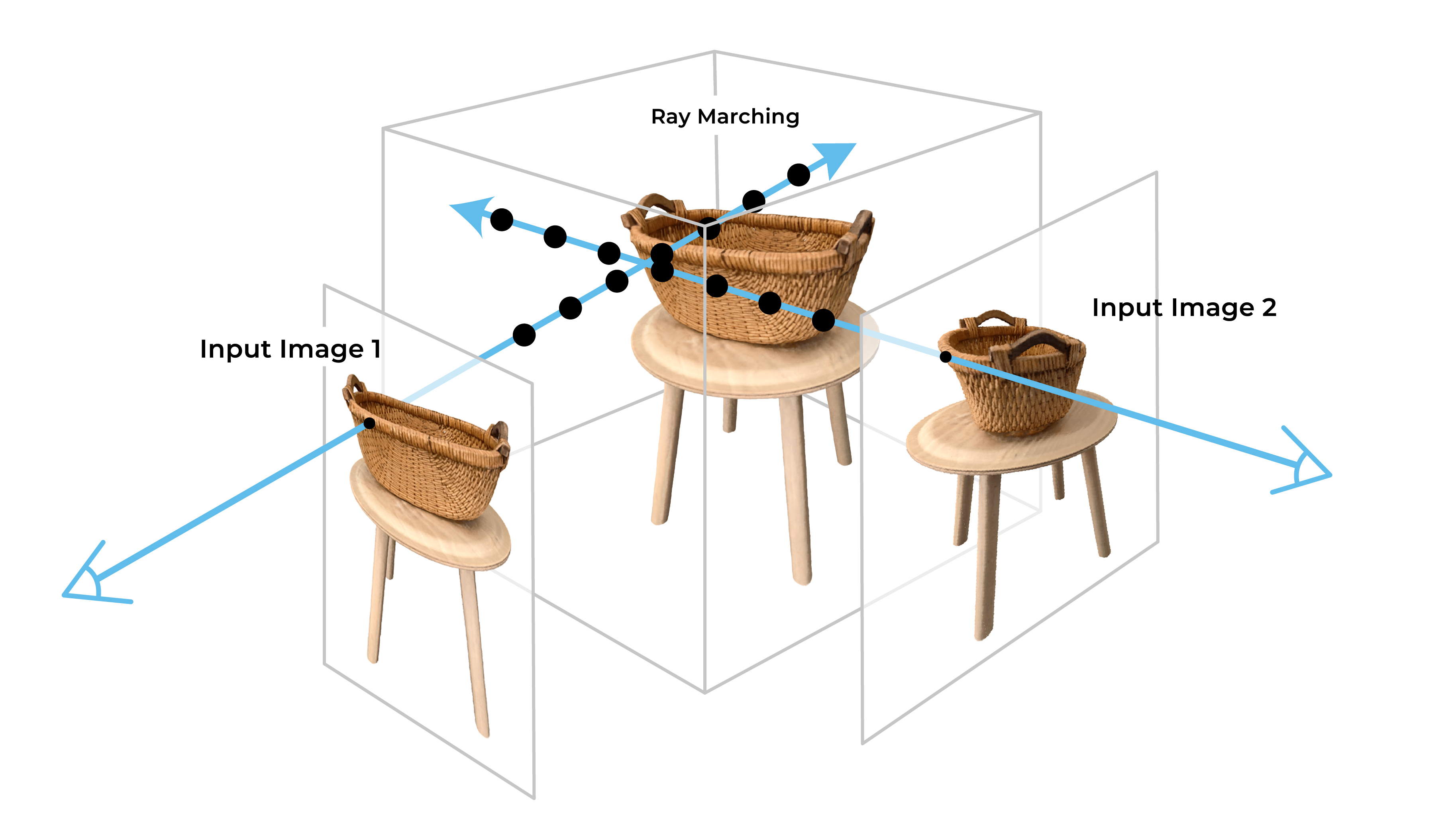

This problem is fundamentally tackled by NeRF (Neural Radiance Fields) based methods, first introduced in the seminal work of Mildenhall et al. (2020).

Technically, we use a set of input images taken along three circular trajectories of varying heights with our capture app. The NeRF algorithm shoots rays corresponding to pixels in these input images, samples 5D coordinates (a 3D point (x, y, z) and a 2D viewing direction) along each ray, and passes them through a coordinate-based neural network to obtain a density value and a color for each sampled point on the ray.
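The ray-shooting and sampling step can be sketched in a few lines of NumPy. This is a minimal illustration, not the production pipeline: it assumes a pinhole camera looking down the negative z-axis (a common NeRF convention), and the names `pixel_ray`, `sample_5d`, `focal`, and `cam_to_world` are hypothetical.

```python
import numpy as np

def pixel_ray(i, j, width, height, focal, cam_to_world):
    """Return the origin and unit direction of the ray through pixel (i, j).

    Assumption: pinhole camera looking down -z; cam_to_world is a 4x4 pose matrix.
    """
    d_cam = np.array([(i - 0.5 * width) / focal,
                      -(j - 0.5 * height) / focal,
                      -1.0])
    d_world = cam_to_world[:3, :3] @ d_cam      # rotate into world space
    d_world = d_world / np.linalg.norm(d_world)
    origin = cam_to_world[:3, 3]                # camera center in world space
    return origin, d_world

def sample_5d(origin, direction, near, far, n_samples):
    """Sample points on the ray and pair each with the 2D viewing direction."""
    t = np.linspace(near, far, n_samples)                 # distances along the ray
    points = origin + t[:, None] * direction              # (n_samples, 3)
    theta = np.arccos(np.clip(direction[2], -1.0, 1.0))   # polar angle
    phi = np.arctan2(direction[1], direction[0])          # azimuth
    view = np.tile([theta, phi], (n_samples, 1))          # (n_samples, 2)
    return t, np.concatenate([points, view], axis=1)      # (n_samples, 5)

origin, direction = pixel_ray(50, 50, 100, 100, 80.0, np.eye(4))
t, coords = sample_5d(origin, direction, near=2.0, far=6.0, n_samples=5)
```

Each row of `coords` is one 5D query for the network. The original NeRF paper uses stratified (jittered) sampling rather than the evenly spaced samples shown here.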

Finally, borrowing long-standing principles of volumetric rendering, the method integrates color values over the ray and gets a final pixel value for each ray.
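The integration along a ray is usually approximated with the quadrature rule from the NeRF paper: each sample contributes its color, weighted by its own opacity and by the transmittance of everything in front of it. The sketch below is illustrative only; `composite_ray` is a hypothetical name, and the density and color inputs are made up rather than network outputs.

```python
import numpy as np

def composite_ray(densities, colors, t):
    """Approximate the volume rendering integral along one ray.

    densities: (n,) non-negative density per sample
    colors:    (n, 3) RGB per sample
    t:         (n,) sorted sample distances along the ray
    """
    # Distance between adjacent samples; the last segment is treated as very long.
    deltas = np.diff(t, append=t[-1] + 1e10)
    # Opacity of each segment.
    alpha = 1.0 - np.exp(-densities * deltas)
    # Transmittance: fraction of light that reaches sample i unabsorbed.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alpha[:-1])])
    weights = trans * alpha
    pixel = (weights[:, None] * colors).sum(axis=0)
    return pixel, weights

t = np.array([1.0, 2.0, 3.0, 4.0])
colors = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]], dtype=float)
densities = np.array([0.0, 0.0, 1e3, 0.0])   # an opaque surface at the third sample
pixel, weights = composite_ray(densities, colors, t)
```

Because every operation here is differentiable, the same weights can carry gradients from a pixel loss back to the network that produced the densities and colors.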

This entire process is iteratively learned via gradient descent, forming a continuous representation and allowing us to do novel view synthesis.

Making NeRFs hyper-realistic for real-world objects, across categories and surfaces

While this learned 3D representation of the object is robust, potent, and vastly game-changing in itself, it is not the messiah.

Training times are sluggish, and real-world captures come with their own idiosyncrasies: capture trajectories, object surfaces, room lighting, and the opacity and size of the object all need to be modeled and accounted for.

For consumers to make purchase decisions, the 3D representations must be accurate and photorealistic. Our model has now been used on thousands of objects in the wild across a variety of real-world conditions, helping the model get better with time.

We have also worked meticulously to create an omni-flexible capture app that guides the user while capturing custom objects in the real world.

Creating explicit mesh representations with NeRF

We have also been able to convert these implicit surfaces into high-quality explicit surface representations, accurately reconstructing discrete triangular meshes along with their corresponding texture patches.
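To give a feel for the first stage of such a conversion, the sketch below samples an implicit surface on a regular voxel grid and flags the cells that the surface passes through. It is a toy under stated assumptions, not the method described in this post: `sphere_sdf` is a stand-in signed distance function for a learned network, and `surface_cells` is a hypothetical helper. A marching-cubes-style extractor would then place triangles inside the flagged cells.

```python
import numpy as np

def sphere_sdf(p, radius=0.5):
    """Stand-in implicit surface: signed distance to a sphere at the origin."""
    return np.linalg.norm(p, axis=-1) - radius

def surface_cells(sdf, resolution=32, bound=1.0):
    """Sample sdf on a grid and flag cells whose corners straddle the zero level set."""
    axis = np.linspace(-bound, bound, resolution)
    grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)
    values = sdf(grid)                      # (R, R, R) signed distances
    inside = values < 0
    r = resolution
    # Gather the 8 corner flags of every cell: shape (8, R-1, R-1, R-1).
    corners = np.stack([
        inside[dx:r - 1 + dx, dy:r - 1 + dy, dz:r - 1 + dz]
        for dx in (0, 1) for dy in (0, 1) for dz in (0, 1)
    ])
    # A cell contains surface if some, but not all, corners are inside.
    crossing = corners.any(axis=0) & ~corners.all(axis=0)
    return values, crossing

values, crossing = surface_cells(sphere_sdf, resolution=32)
print(f"{crossing.sum()} of {crossing.size} cells contain surface")
```

Only a thin shell of cells is flagged, which is why mesh extraction stays tractable even at high grid resolutions.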

Our hybrid architecture consists of an explicit voxel representation with an implicit surface representation.

This architecture combines the benefits of an explicit representation, which allows for manipulation of the scene, with the representational power of an implicit network. Having mesh outputs allows us to split and edit scenes in traditional 3D software like Blender or Maya.

Our focus has been to make the output meshes editable by 3D artists so that they can be used for downstream tasks such as enhancement and animation.

To model surface lighting, we use BRDF measurements, which describe the angle-dependent optical scatter from these surfaces. They let us approximate what a surface may look like under different lighting conditions, as well as disentangle its appearance into diffuse and specular components.
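The value of separating diffuse and specular terms is easiest to see with a classic analytic model. The sketch below uses Lambertian diffuse plus Blinn-Phong specular as a stand-in; it is not measured BRDF data and not the model used in this post, and `shade`, `albedo`, `specular_color`, and `shininess` are illustrative names.

```python
import numpy as np

def normalize(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def shade(normal, light_dir, view_dir, albedo, specular_color, shininess):
    """Return (diffuse, specular) RGB terms for one surface point.

    Diffuse is Lambertian and view-independent; specular is Blinn-Phong
    and depends on both the light and the viewing direction.
    """
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    n_dot_l = float(n @ l)
    n_dot_v = float(n @ v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        # Light or viewer is behind the surface: nothing is reflected.
        return np.zeros(3), np.zeros(3)
    diffuse = np.asarray(albedo, dtype=float) * n_dot_l
    h = normalize(l + v)                                  # half vector
    highlight = max(float(n @ h), 0.0) ** shininess
    specular = np.asarray(specular_color, dtype=float) * highlight
    return diffuse, specular

# Relighting: same material, two light directions.
material = dict(albedo=[0.8, 0.2, 0.2], specular_color=[1.0, 1.0, 1.0], shininess=32)
overhead = shade([0, 0, 1], [0, 0, 1], [0, 0, 1], **material)
grazing = shade([0, 0, 1], [np.sqrt(3) / 2, 0, 0.5], [0, 0, 1], **material)
```

Once the two terms are stored separately, changing the light only requires re-evaluating them, and the material itself stays untouched.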

While we are able to generate photorealistic 3D representations, we also need to render them in real time on smartphones and web devices. We have built our renderer using custom compute shaders combined with our efficient ray casting-based rendering algorithm, enabling high-FPS neural rendering in 3D and AR. We are actively researching ways to render large scenes in real time.

Extending the NeRF universe

Finally, we are also creating an editing/creator interface to help sellers enhance and build on the generated 3D representations.

This includes two major tasks.

1. Disentangling the neural implicit space to enable users to edit elements, including color and texture change, floor mapping, part selection, and scene manipulation.

2. Building a set of smart tools using generative models (e.g., diffusion models) to go from single or sparse images to 3D. This also covers aspects such as human-in-the-loop feedback and online learning to help sellers build better products and virtual room experiences.

Our lab is currently a brimming hotbed at the intersection of highly challenging graphics and deep learning problems, coupled with our vision. It’s a special place from where we aim to shape what the creative future around us will look like.

It is our ultimate endeavor to empower creators to replace existing 2D content with much more exciting and realistic AR-based 3D visuals.

The potential of technology to significantly improve customer shopping experiences excites us.

This can enable customers to view more realistic product visualizations from eCommerce stores by simply using their phone's camera. Imagine shopping from the comfort of your home without having to worry about size, texture, look and feel, and, most importantly, without having to deal with the hassle of returns. eCommerce retailers, on the other hand, will be able to create 3D models on their own without going for photo shoots. These 3D outputs have the potential to drastically reduce photography costs.

We are excited about the prospects ahead. Watch this space for updates.